Biography

I am a postdoctoral researcher at Purdue University, advised by Prof. Ziran Wang and Prof. Ruqi Zhang. I received my Ph.D. degree in Computer Science from Shanghai Jiao Tong University in June 2024, supervised by Prof. Haibing Guan and co-supervised by Prof. Yang Hua, Prof. Tao Song, and Prof. Ruhui Ma.

- Uncertainty in Autonomous Driving

- Bayesian Neural Networks

- Causal Discovery

- Adversarial Attack and Defense

- Diffusion Models

- PhD in Computer Science and Technology, 2019.9 - 2024.6, Shanghai Jiao Tong University

- BSc in Computer Science and Technology (IEEE Honor Class), 2015.9 - 2019.6, Shanghai Jiao Tong University

- Electrical Engineering International Intensive program, 2017.6 - 2017.8, University of Washington

Selected Publications

In this paper, we propose a novel DNN-based method for supervised causal learning that addresses systematic biases in existing methods, with a newly designed pairwise encoder serving as the core architecture.

In this paper, we propose Information Bound as a metric for the amount of information in Bayesian neural networks. Unlike mutual information in deterministic neural networks, which usually requires modifying the network structure or using specific input data, Information Bound can be easily estimated on existing Bayesian neural networks without any modification of network structures or training processes.

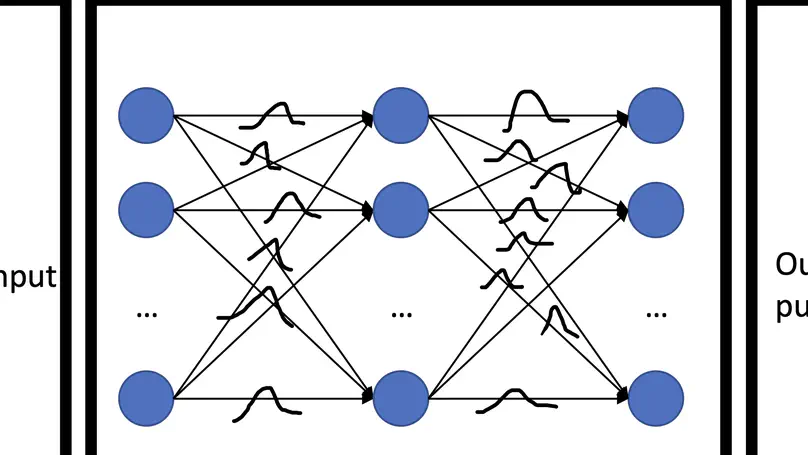

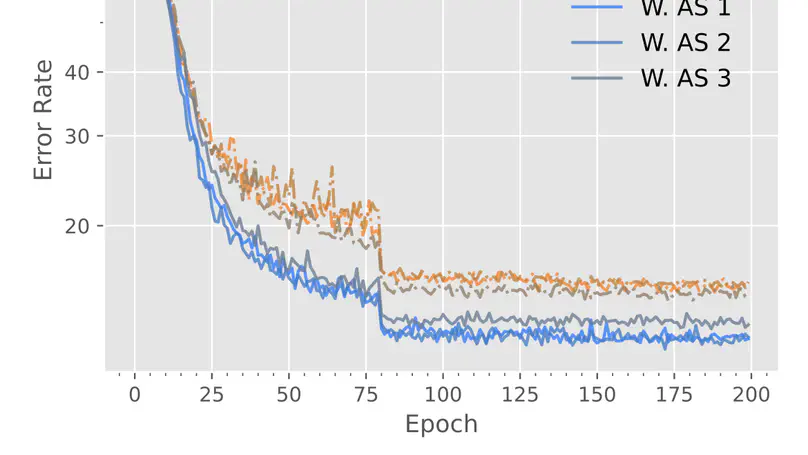

In this paper, we argue that the randomness of sampling in Bayesian neural networks introduces errors into parameter updates during training and yields some sampled models that perform poorly at test time. We propose training Bayesian neural networks with Adversarial Distribution as a theoretical solution, and we present the Adversarial Sampling method as a practical approximation.

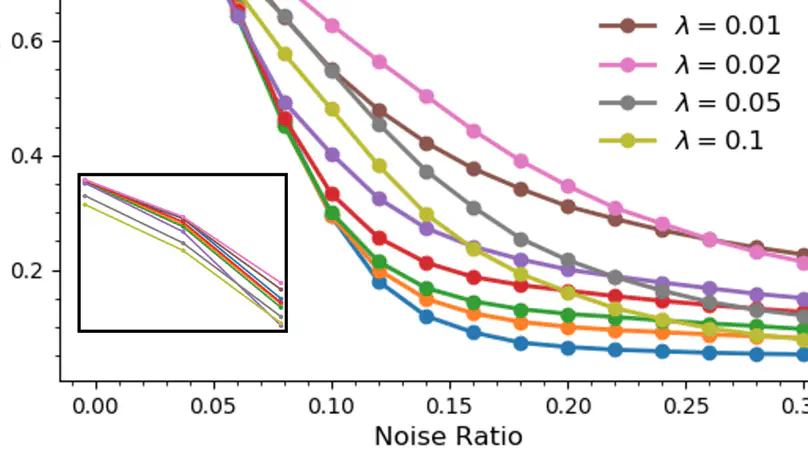

We propose Spectral Expectation Bound Regularization (SEBR) to enhance the robustness of Bayesian neural networks. Our theoretical analysis shows that training with SEBR improves robustness to adversarial noise. We also prove that training with SEBR reduces the epistemic uncertainty of the model.

Publication List

Internship Experience

Mentor: Justin Ding. Responsibilities include:

- Solve supervised causal discovery problems using a transformer-based neural network architecture.

- Explore better solutions and submit two papers to a top conference, as first author and as co-author respectively.

Responsibilities include:

- Reproduce factor mining techniques based on deep neural networks.

- Use Bayesian neural networks to model factor uncertainty and self-attention mechanisms to model relationships between stocks.

Academic Service

- Organizer: 4th LLVM-AD Workshop @ IEEE ITSC 2025

- Conference reviewer: NeurIPS 2025, ICCV 2025, ACMMM 2025, KDD 2025, ICML 2025, CVPR 2025, AAAI 2025, ICPR 2024, NeurIPS 2024, CVPR 2024, ICML 2024, ICLR 2023, NeurIPS 2023, EAI CollaborateCom 2022.

- Journal reviewer: IEEE Computational Intelligence Magazine, IEEE Internet of Things Journal.

Contests

Awards

- Commendable reviewer, KDD (2025)

- Stars of Tomorrow (Award of Excellent Intern of Microsoft Research Asia) (2023)

- CETC The 14th Research Institute Glarun Scholarship (2021)

- Three Good Student of SJTU (2018, 2020, 2021)

- Excellent League Member of SJTU (2016, 2020, 2021)

- Outstanding Graduate of SJTU (2019)

- Excellent Student Cadre of SJTU (2016)

Contact

- jiaru AT purdue DOT edu

- Purdue Digital Twin Lab, West Lafayette, 47906